In a world driven by visuals, deep-learning networks are increasingly taking over image recognition in civilian and military systems.

However, their vulnerability to adversarial attacks can cause havoc, city-based scientists said.

Operating out of offices in the Computational and Data Sciences (CDS) building at the Indian Institute of Science (IISc), two floors above a phalanx of Cray supercomputers, a research team under Professor R Venkatesh Babu is working to create more robust deep-learning systems that can withstand adversarial attacks.

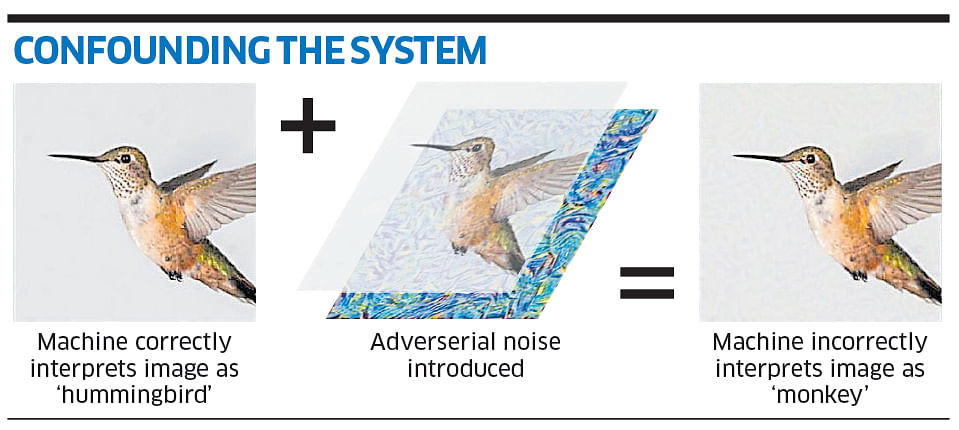

“An adversarial attack is computer-generated noise that can manipulate pictures. The altered image looks virtually indistinguishable from the original to people, but the noise will actually cause the machine-learning model to misinterpret the image,” said Professor Babu.

In a paper currently under review, Professor Babu and his students highlighted an example image of a hummingbird.

The deep-learning system identified the original picture correctly. However, when a deliberately crafted layer of ‘adversarial noise’ was added to the image, the AI classified it as a gibbon, even though the picture still looked like a hummingbird to the human eye.

“While this may seem like a prank, the implications are significant. In a military scenario, you could have satellite imagery of an enemy airfield, for example, which your deep-learning model may interpret incorrectly,” Professor Babu explained.

In the civilian market, the deep-learning systems onboard autonomous vehicles that read and identify street signs are just as easy to compromise, which could lead to accidents.

The takeaway from these tests is how little noise is needed to fool the system, even though the added noise is not enough to fool people.
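To give a feel for why so little noise can be enough, here is a minimal sketch in Python. It is not the IISc team's method or model: it assumes a toy two-class linear classifier with random weights and a random 10,000-pixel "image", and the names `predict` and `craft_adversarial` are hypothetical. Each pixel is nudged by the same tiny amount in whichever direction favours the wrong class, and those nudges add up across thousands of pixels.

```python
import numpy as np

def predict(weights, image):
    """Return the index of the highest-scoring class for a flattened image."""
    return int(np.argmax(weights @ image))

def craft_adversarial(weights, image, target):
    """Shift every pixel by the same small step toward the target class.

    Assumes a linear classifier, where the gradient of
    (target score - original score) with respect to the image is simply
    the difference between the two weight rows.
    """
    original = predict(weights, image)
    scores = weights @ image
    margin = scores[original] - scores[target]
    gradient = weights[target] - weights[original]
    # Smallest uniform step that overturns the margin, plus 1% slack.
    epsilon = 1.01 * margin / np.abs(gradient).sum()
    return image + epsilon * np.sign(gradient), epsilon

# Toy stand-ins (assumptions): random weights and a random image in [0, 1].
rng = np.random.default_rng(0)
weights = rng.normal(size=(2, 10_000))
image = rng.uniform(0.0, 1.0, size=10_000)

original_label = predict(weights, image)
target_label = 1 - original_label
adversarial, epsilon = craft_adversarial(weights, image, target_label)
fooled_label = predict(weights, adversarial)

print(f"original label: {original_label}, label after noise: {fooled_label}")
print(f"largest change to any pixel: {epsilon:.4f} (pixel range is 0 to 1)")
```

Real attacks on deep networks have to estimate the gradient through many layers rather than read it off a weight matrix, but the principle of many small, coordinated pixel changes is the same.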

India lagging behind

So far, the team has been able to defeat various in-house deep-learning systems with their own ‘adversarial attacks’. Professor Babu likened this to creating computer viruses to test the efficacy of anti-virus systems.

“Through this build-and-attack method, we are working towards creating a more robust system,” he said, but added that India lagged behind other nations.

China has published the highest number of research papers on adversarial attacks in the world, followed by the United States and Europe.

Of about 1,000 papers published worldwide on the subject, about 30% are from China. India’s share amounts to fewer than 20 papers.